In the world of machine learning and deep learning, every bit of optimization counts. If you’re working with PyTorch on an NVIDIA RTX 3060 GPU, you may have encountered the TORCH_CUDA_ARCH_LIST environment variable. It controls which CUDA architectures receive compiled code when you build PyTorch or your own CUDA extensions. Setting it correctly for the RTX 3060 can shorten builds and prevent compatibility errors, but its impact is easy to misunderstand. So, let’s dive into how to use TORCH_CUDA_ARCH_LIST to get the most out of your RTX 3060.

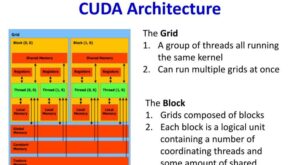

What Is CUDA Architecture in GPUs?

CUDA, or Compute Unified Device Architecture, is NVIDIA’s programming model that allows developers to leverage the parallel processing power of their GPUs for computing tasks, especially in high-performance computing fields like AI and deep learning.

Overview of CUDA and Its Importance

CUDA is the foundation of general-purpose computing on NVIDIA GPUs. It lets thousands of threads carry out mathematical calculations in parallel, and frameworks like PyTorch and TensorFlow build on it to run faster on NVIDIA hardware. Each GPU generation also has its own CUDA architecture version, called its compute capability, which determines which compiled GPU code it can execute.

The Role of CUDA Architecture in Machine Learning and AI

Machine learning, especially deep learning, requires heavy computational power. By using CUDA, developers can efficiently handle large datasets and neural networks and take full advantage of NVIDIA’s GPUs. This is why compiling GPU code for the right CUDA architecture matters whenever you build CUDA code yourself.

Importance of Defining CUDA Architectures in PyTorch

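Why does TORCH_CUDA_ARCH_LIST matter? PyTorch’s CUDA kernels are compiled ahead of time for a fixed set of compute capabilities. TORCH_CUDA_ARCH_LIST is the environment variable that selects that set when you build PyTorch from source or compile your own C++/CUDA extensions. It does not change the behavior of an already-installed PyTorch package at runtime. If the variable is unset during a build, the build tooling picks a default, for example the architectures of the GPUs it can detect at build time. That default may not be what you want if the resulting binary must run on other machines.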
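Before building anything, it helps to check whether your installed PyTorch package already contains code your GPU can run. torch.cuda.get_arch_list() reports the architectures a build was compiled for, and torch.cuda.get_device_capability() reports your GPU’s capability.

The helper below is a sketch under stated assumptions, not a PyTorch API. covers_capability is a hypothetical name. It applies CUDA’s binary-compatibility rule to "sm_" entries: machine code built for X.y runs on devices X.z with z ≥ y. It treats "compute_" (PTX) entries as JIT-compilable on equal or newer GPUs. It skips arch-specific variants such as "sm_90a".

```python
import re
from typing import Iterable, Tuple

# Matches entries such as "sm_86" or "compute_80"; variants like "sm_90a" are skipped.
_ARCH_RE = re.compile(r"^(sm|compute)_(\d{2,})$")


def covers_capability(arch_list: Iterable[str], capability: Tuple[int, int]) -> bool:
    """Hypothetical helper: can code built for arch_list run on a GPU with this capability?"""
    major, minor = capability
    for entry in arch_list:
        match = _ARCH_RE.match(entry)
        if match is None:
            continue  # ignore entries this sketch does not understand
        kind, digits = match.groups()
        # The last digit is the minor version: "86" -> (8, 6), "100" -> (10, 0)
        e_major, e_minor = int(digits[:-1]), int(digits[-1])
        if kind == "sm" and e_major == major and e_minor <= minor:
            return True  # machine code runs on same-major GPUs with equal or higher minor
        if kind == "compute" and (e_major, e_minor) <= (major, minor):
            return True  # PTX can be JIT-compiled for equal or newer GPUs
    return False
```

With PyTorch installed, covers_capability(torch.cuda.get_arch_list(), torch.cuda.get_device_capability(0)) should return True on an RTX 3060 with a recent official CUDA build. Those builds ship kernels for compute capability 8.6.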

Optimization and Performance Benefits

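When you compile CUDA code yourself, you can restrict TORCH_CUDA_ARCH_LIST to your GPU’s compute capability (8.6 for the RTX 3060). nvcc then generates machine code specifically for that architecture instead of for a long list of targets. The reliable gains are shorter build times and smaller binaries. Any runtime speedup depends on the kernels involved and should be measured rather than assumed.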

Understanding the NVIDIA GeForce RTX 3060

The NVIDIA RTX 3060 is a favorite for many deep learning developers due to its high performance-to-cost ratio. It offers solid computational power for training and inference on moderately sized models.

Key Specifications of the RTX 3060

The RTX 3060 includes:

- Memory: 12GB GDDR6

- CUDA cores: 3584

- Architecture: Ampere (compute capability 8.6)

- Ideal for: Intermediate ML projects, gaming, and 3D rendering

Why the RTX 3060 is Popular Among Developers

Given its affordability and robust performance, the RTX 3060 is widely used for machine learning projects that don’t require ultra-high-end hardware but still need significant power.

TORCH_CUDA_ARCH_LIST Overview

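TORCH_CUDA_ARCH_LIST is an environment variable that tells PyTorch’s build tooling which CUDA architectures to generate kernels for. Each entry is a compute capability written as major.minor. An entry can optionally be followed by +PTX to also embed PTX intermediate code that newer GPUs can JIT-compile. The variable is read when PyTorch is built from source and when C++/CUDA extensions are compiled with torch.utils.cpp_extension. It has no effect on a prebuilt PyTorch package. The RTX 3060, like the rest of the GeForce RTX 30 series, has compute capability 8.6 (Ampere architecture).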
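You do not have to memorize your GPU’s compute capability, because PyTorch can report it. The sketch below turns the (major, minor) tuple from torch.cuda.get_device_capability() into a TORCH_CUDA_ARCH_LIST entry. to_arch_entry and detect_arch_entry are hypothetical helper names, and the function returns None when PyTorch or a CUDA GPU is unavailable.

```python
from typing import Optional, Tuple


def to_arch_entry(capability: Tuple[int, int], with_ptx: bool = False) -> str:
    """Format a (major, minor) compute capability as a TORCH_CUDA_ARCH_LIST entry."""
    major, minor = capability
    entry = f"{major}.{minor}"
    return entry + "+PTX" if with_ptx else entry


def detect_arch_entry(device_index: int = 0) -> Optional[str]:
    """Return e.g. "8.6" for an RTX 3060, or None without PyTorch or a CUDA GPU."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return to_arch_entry(torch.cuda.get_device_capability(device_index))
```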

TORCH_CUDA_ARCH_LIST in Relation to the RTX 3060

When configuring TORCH_CUDA_ARCH_LIST for the RTX 3060, the value you specify is its compute capability, 8.6. Setting it correctly ensures that the code you compile contains native kernels for your GPU. Otherwise the code relies on just-in-time PTX compilation or fails to load at all.

Recommended Settings for the RTX 3060

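For the RTX 3060, you would typically set the variable to its compute capability before any CUDA code is compiled. In a shell, export TORCH_CUDA_ARCH_LIST="8.6" does the same.

```python
import os
os.environ["TORCH_CUDA_ARCH_LIST"] = "8.6"  # environment variable names are case-sensitive
```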

Setting Up CUDA Arch List for PyTorch and RTX 3060

Let’s walk through setting up TORCH_CUDA_ARCH_LIST for your RTX 3060.

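- Install a CUDA toolkit whose nvcc version matches the CUDA version your PyTorch build was compiled with (check torch.version.cuda). You only need this if you will compile CUDA code yourself, because prebuilt PyTorch packages bundle their own CUDA runtime.

- Set the TORCH_CUDA_ARCH_LIST environment variable before building PyTorch from source or compiling a custom C++/CUDA extension. It is an environment variable, not a torch.cuda setting.

- Use the following code in your build script to set the variable and confirm your GPU’s compute capability: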

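```python
import os
import torch

os.environ["TORCH_CUDA_ARCH_LIST"] = "8.6"  # read when CUDA code is compiled, not at import
print(torch.cuda.get_device_capability(0))  # (8, 6) on an RTX 3060
```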
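TORCH_CUDA_ARCH_LIST is an ordinary environment variable, so you can also pass it explicitly to a build subprocess. This is handy when installing an extension project with pip.

The sketch below assumes a hypothetical local project directory whose setup.py uses torch.utils.cpp_extension.CUDAExtension. env_with_arch_list and build_extension are illustrative names, not PyTorch APIs.

```python
import os
import subprocess
import sys
from typing import Dict, Mapping, Optional


def env_with_arch_list(arch_list: str, base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Copy an environment (os.environ by default) and set TORCH_CUDA_ARCH_LIST in the copy."""
    env = dict(os.environ if base is None else base)
    env["TORCH_CUDA_ARCH_LIST"] = arch_list
    return env


def build_extension(project_dir: str, arch_list: str = "8.6") -> None:
    """Install a local extension project (hypothetical) with the given architecture list."""
    # --no-build-isolation lets the build see the torch package that is already installed
    subprocess.run(
        [sys.executable, "-m", "pip", "install", "--no-build-isolation", project_dir],
        env=env_with_arch_list(arch_list),
        check=True,
    )
```

For example, build_extension("./my_cuda_ext") would compile a hypothetical my_cuda_ext project for compute capability 8.6 only. Copying the environment leaves the current process untouched.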

Benefits of Specifying CUDA Architecture for the RTX 3060

By specifying the CUDA architecture, you ensure:

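- Native kernels: the compiled code contains machine code built for compute capability 8.6, so nothing has to be JIT-compiled from PTX when it first loads.

- Reduced overhead: fewer target architectures mean shorter compile times and smaller binaries, because no kernels are generated for GPUs you don’t use.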

Efficiency in Deep Learning Workloads

Well-matched CUDA settings can shorten training times for custom kernels, which helps when working with larger models on the RTX 3060. The trade-off is portability. A binary built only for 8.6 will not run on GPUs with an older compute capability such as 7.5 (Turing), or on a newer major architecture. Appending +PTX (for example "8.6+PTX") lets newer GPUs JIT-compile the embedded code.

Using TORCH_CUDA_ARCH_LIST with Multiple GPU Architectures

If you’re targeting more than one type of GPU, you can list several compute capabilities in TORCH_CUDA_ARCH_LIST, separated by semicolons or spaces. For example, to cover Turing (7.5), the A100 (8.0), and the RTX 3060 (8.6):

```python
import os
os.environ["TORCH_CUDA_ARCH_LIST"] = "7.5;8.0;8.6"  # Turing, A100, RTX 3060
```
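Each entry in the list ends up as nvcc code-generation flags. The function below is a simplified sketch of that translation, loosely modeled on what PyTorch’s extension builder does, not its actual code. A plain "X.Y" entry produces native machine code for sm_XY. A "+PTX" suffix additionally embeds PTX for compute_XY. Unlike PyTorch, this sketch accepts only numeric entries.

```python
import re
from typing import List

# "major.minor" with an optional "+PTX" suffix, e.g. "8.6" or "8.6+PTX"
_ENTRY_RE = re.compile(r"^(\d+)\.(\d+)(\+PTX)?$")


def arch_list_to_gencode_flags(arch_list: str) -> List[str]:
    """Sketch: turn a TORCH_CUDA_ARCH_LIST value into nvcc -gencode flags."""
    flags: List[str] = []
    # Entries may be separated by semicolons or spaces
    for entry in arch_list.replace(" ", ";").split(";"):
        if not entry:
            continue  # tolerate doubled or trailing separators
        match = _ENTRY_RE.match(entry)
        if match is None:
            raise ValueError(f"unsupported arch entry: {entry!r}")
        major, minor, ptx = match.groups()
        num = f"{major}{minor}"
        # Native machine code for this compute capability
        flags.append(f"-gencode=arch=compute_{num},code=sm_{num}")
        if ptx:
            # Also embed PTX so newer GPUs can JIT-compile the kernels
            flags.append(f"-gencode=arch=compute_{num},code=compute_{num}")
    return flags
```

Running it on "7.5;8.0;8.6" yields one sm_ flag per architecture. Each extra entry means another full compilation pass, which is why long lists slow down builds.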

Common issues include:

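- CUDA version mismatch: when compiling extensions, the nvcc in your CUDA toolkit should match the CUDA version PyTorch was built with (torch.version.cuda). Your NVIDIA driver must also be new enough to support that CUDA version. GPUs themselves don’t have a CUDA version; their compute capability is what TORCH_CUDA_ARCH_LIST refers to.

- Incorrect arch settings: if the list contains no entry compatible with your GPU, loading the kernels fails at runtime, typically with a "no kernel image is available for execution on the device" error.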
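To catch a version mismatch before a long build fails, you can compare the CUDA version reported by PyTorch with the release printed by nvcc --version. The sketch below checks only the major version, a simplified version of the comparison PyTorch’s extension builder performs. parse_nvcc_release, cuda_majors_match and local_nvcc_release are hypothetical helper names.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_nvcc_release(nvcc_output: str) -> Optional[str]:
    """Extract e.g. "12.1" from `nvcc --version` output; None if not found."""
    match = re.search(r"release (\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def cuda_majors_match(torch_cuda: Optional[str], nvcc_release: Optional[str]) -> bool:
    """True when both versions are known and share the same major version."""
    if not torch_cuda or not nvcc_release:
        return False
    return torch_cuda.split(".")[0] == nvcc_release.split(".")[0]


def local_nvcc_release() -> Optional[str]:
    """Run nvcc if it is on PATH and return its release string."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run([nvcc, "--version"], capture_output=True, text=True, check=False)
    return parse_nvcc_release(result.stdout)
```

With PyTorch installed, call cuda_majors_match(torch.version.cuda, local_nvcc_release()). torch.version.cuda is None on CPU-only builds, in which case the check returns False.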

Alternative Tools and Libraries for CUDA Optimization

Other libraries that can help with CUDA optimization include:

- cuDNN: NVIDIA’s library for deep neural networks.

- NCCL: NVIDIA Collective Communications Library for multi-GPU setups.
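PyTorch can tell you which of these CUDA components your installed build actually uses. The sketch below gathers that information into a dictionary. cuda_library_report is a hypothetical name, and it returns an empty dictionary when PyTorch is not installed. It relies only on torch.version.cuda, torch.backends.cudnn.is_available(), torch.backends.cudnn.version() and torch.cuda.get_arch_list().

```python
from typing import Dict


def cuda_library_report() -> Dict[str, object]:
    """Summarize the CUDA-related libraries the installed PyTorch build uses."""
    try:
        import torch
    except ImportError:
        return {}  # PyTorch not installed
    return {
        "torch_cuda": torch.version.cuda,  # None for CPU-only builds
        "cudnn_available": torch.backends.cudnn.is_available(),
        "cudnn_version": torch.backends.cudnn.version(),  # None if cuDNN is unavailable
        "compiled_arches": torch.cuda.get_arch_list(),  # [] when CUDA is unavailable
    }
```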

To make the most of TORCH_CUDA_ARCH_LIST:

- Match your CUDA toolkit version to the CUDA version your PyTorch build uses.

- Benchmark performance with and without custom CUDA settings.

- Use the latest drivers compatible with your GPU.
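To benchmark with and without custom settings, time the same workload against each build. CUDA kernel launches are asynchronous, so the timer must wait for the GPU to finish. The helper below is a minimal sketch using only the standard library. median_runtime is a hypothetical name, and you pass torch.cuda.synchronize as the sync argument when timing GPU work. PyTorch also provides torch.utils.benchmark.Timer for the same purpose.

```python
import statistics
import time
from typing import Callable, Optional


def median_runtime(
    fn: Callable[[], object],
    warmup: int = 3,
    iters: int = 10,
    sync: Optional[Callable[[], None]] = None,
) -> float:
    """Median wall-clock seconds per call of fn, measured after warm-up calls."""
    for _ in range(warmup):
        fn()  # warm-up: JIT compilation, memory allocation, autotuning
    if sync is not None:
        sync()
    times = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        if sync is not None:
            sync()  # wait for queued GPU work before stopping the clock
        times.append(time.perf_counter() - start)
    return statistics.median(times)
```

A typical comparison would call median_runtime(lambda: model(batch), sync=torch.cuda.synchronize) once with an extension built for "8.6" and once with a broader list. Here model and batch are placeholders for your own objects. The median is less sensitive to occasional slow iterations than the mean.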

Performance Benchmarks: RTX 3060 with TORCH_CUDA_ARCH_LIST

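Treat reported gains of around 10–15% in training speed on an RTX 3060, compared to generic settings, as workload-dependent. Such gains can only apply to CUDA code you compile yourself, because PyTorch’s prebuilt kernels already include code for compute capability 8.6. Benchmark your own models before relying on such figures.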

Conclusion

Setting TORCH_CUDA_ARCH_LIST correctly for the NVIDIA RTX 3060 matters whenever you compile CUDA code yourself, whether that is PyTorch from source or a custom extension. Targeting compute capability 8.6 gives you native kernels, shorter builds, and smaller binaries. Adding more entries or +PTX keeps the result portable to other GPUs. Whether you’re running complex AI models or standard ML tasks, understanding these settings helps you avoid build errors and get the most out of your GPU.
